Accelerating Research

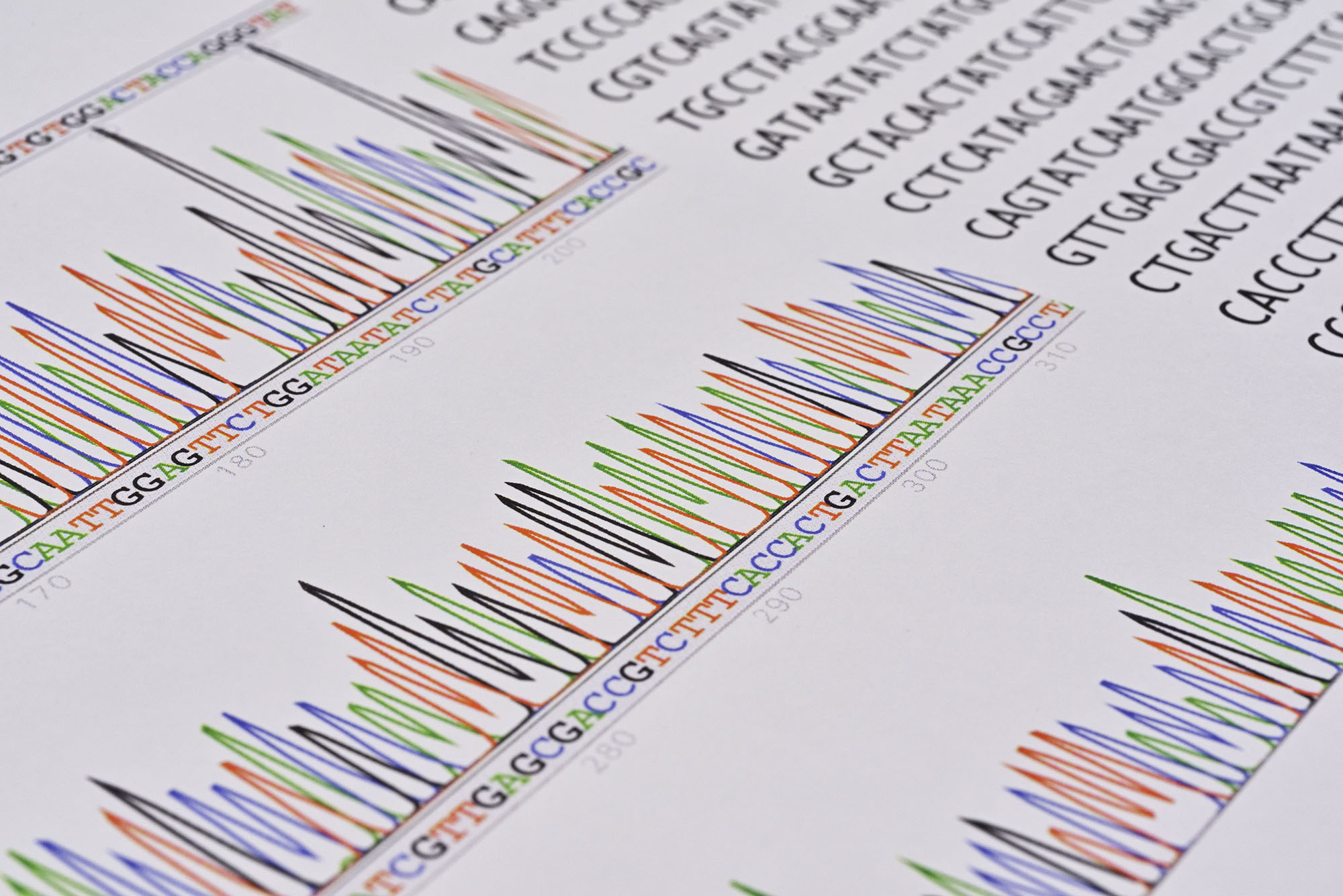

Big data is also supporting innovation by playing an increasing role in fundamental research. This is seen particularly in very large international collaborations, such as the Human Genome Project or the experiments at the CERN particle physics laboratory in Geneva, as well as in the high-throughput observational and analytical systems used, for example, in astronomy, satellite sensing or genomics. A growing number of scientific disciplines have established standards for data generation and sharing. The increasing size of datasets, which can reach several petabytes, makes it harder to access, store, manipulate and analyse the data in question. This creates a need for efficient analytical methods and machine learning algorithms.

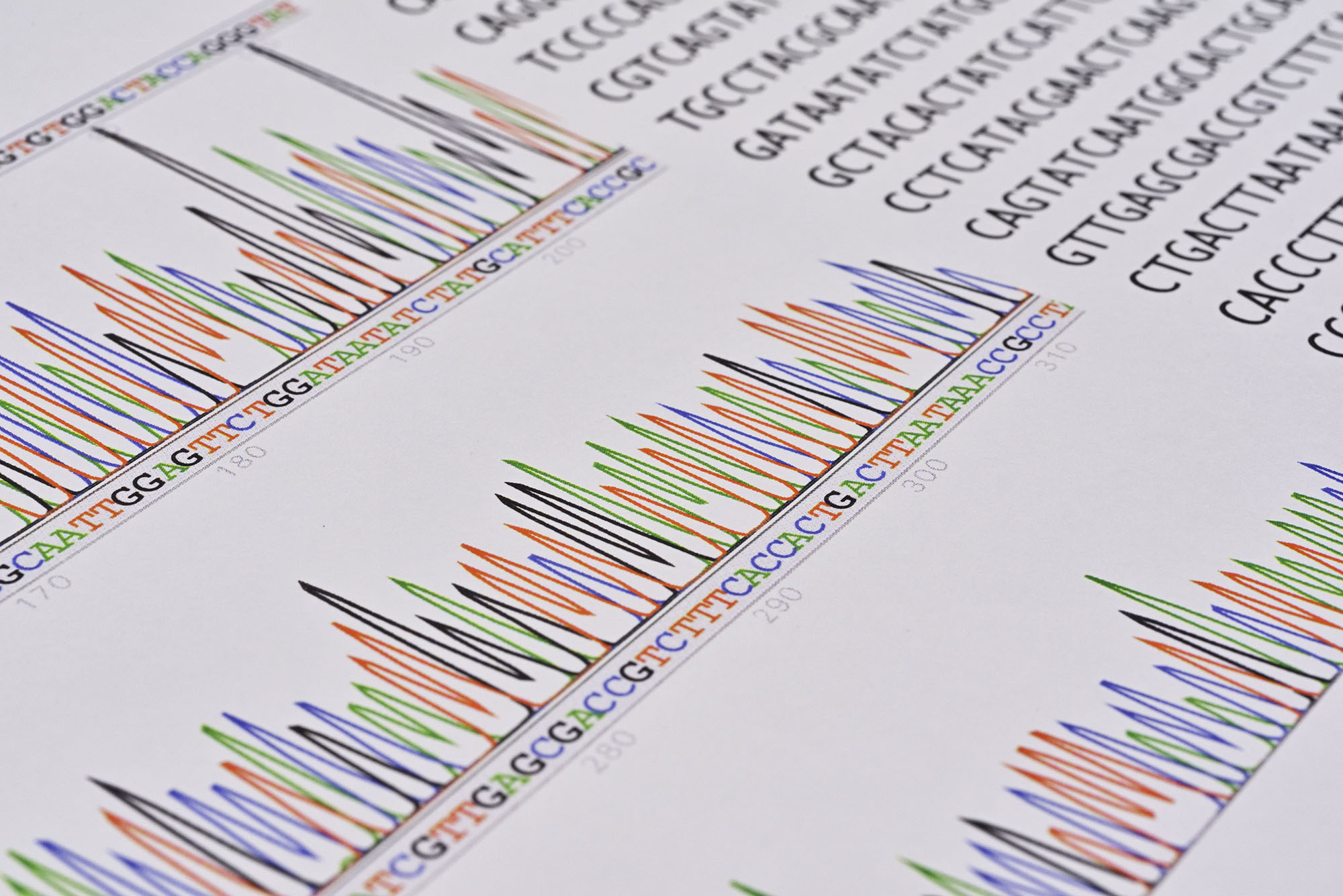

The project aims to provide technical solutions to the problem of accessing and working with the ever-growing amount of biological sequencing data.

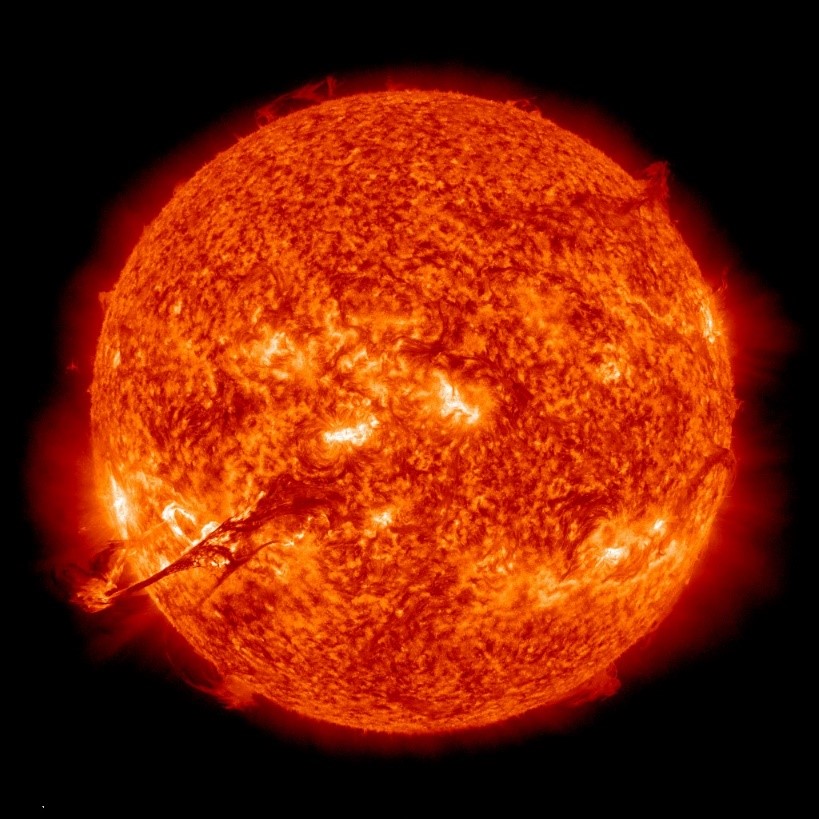

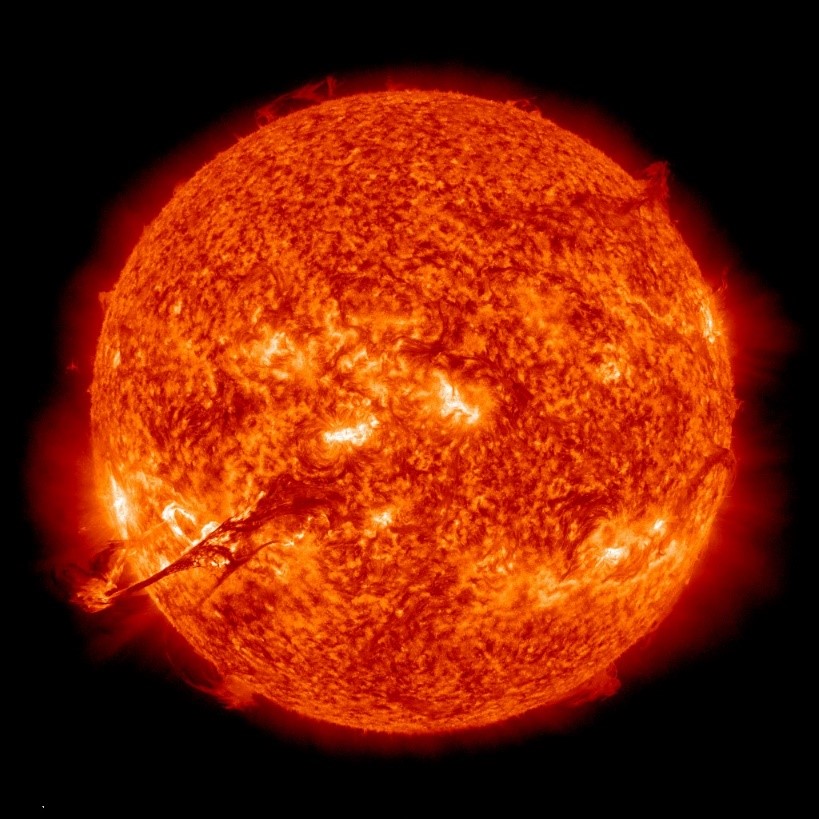

The project helps to gain a better understanding of the physics of the sun and to develop methods for predicting solar eruptions.

The project developed new computational approaches capable of processing genomic data of variable quality to compare the genomes of different organisms.

Interview with Kurt Stockinger, the principal investigator of the project, about results, policy recommendations and Big Data in general.

Interview with Helmut Harbrecht, the principal investigator of the project, about results, implications and what Big Data means for him.